How to fix "Cannot connect to the Docker daemon at unix:///var/run/docker.sock"


You’re mid-deploy. Maybe spinning up a container for a hotfix or debugging a broken build in your CI pipeline. You hit enter on a command you’ve run a hundred times:

docker run -d my-service

And instead of your container starting, you get hit with this:

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

You check Docker’s status, reboot the machine, rerun the command with sudo, maybe even reinstall Docker. Still stuck. You search online only to find 3 million views on Stack Overflow and no clear answer.

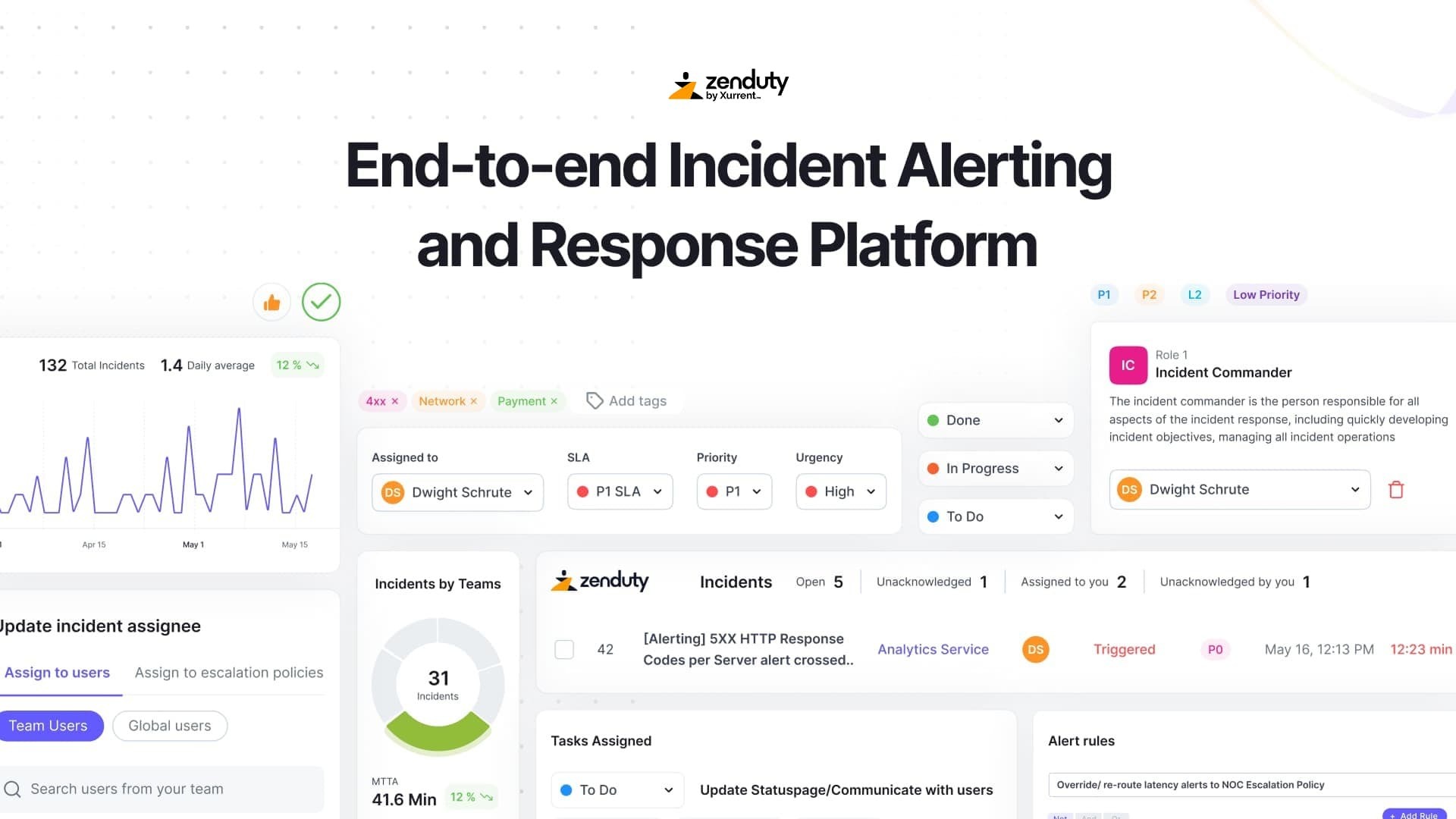

This post is the answer you've been looking for. Before we jump in: Zenduty is built to flag and fix underlying issues like this in your production operations, and we've recently unveiled our AI features. Give it a spin for free?


Why this error keeps coming back

Unless you understand what’s actually happening under the hood, you’ll end up guessing at fixes that might not apply to your setup. Here’s what we’re going to walk you through:

- What this error really means (not what it sounds like)

- Why it happens by platform, user context, and installation type

- How to diagnose it with commands that tell you exactly where things are broken

- How to fix it with targeted, environment-specific solutions that won’t break again on the next reboot

And we’ll also show you how to prevent it from recurring.

If you’re skimming, here’s a quick checklist. You’ll find detailed breakdowns and use cases for each of these in the sections below.

Before we jump into that, here's an interesting read on how Docker was built. We had a chat with Docker's co-founder, Solomon Hykes, and he shared the story behind Docker.

A chat with Docker's co-founder, Solomon Hykes

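Common root causes

- The Docker daemon (dockerd) isn't running, or isn't enabled to start on boot

- Your user isn't in the docker group, so it can't access /var/run/docker.sock

- The CLI is pointed at the wrong context, or a stale DOCKER_HOST overrides it

- Docker Desktop isn't running (macOS) or isn't integrated with your WSL2 distro

- In CI, Docker-in-Docker isn't configured or the socket isn't mounted

- Multiple installs (Docker Desktop, Docker CE, Snap) are competing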

Quick diagnostic commands

```shell
docker context inspect --format '{{ .Endpoints.docker.Host }}'  # Check endpoint
ls -l /var/run/docker.sock                                      # Check socket + permissions
systemctl status docker                                         # Check daemon state
echo $DOCKER_HOST                                               # Check for misconfigured env var
```

Now let's unpack the full meaning of "Cannot connect to the Docker daemon at unix:///var/run/docker.sock", piece by piece, because once you understand the moving parts behind it, the fix becomes obvious.

Let’s dig in.

“Cannot connect to the Docker daemon”

This line doesn’t tell you the daemon is stopped. It just means the Docker CLI tried to talk to the daemon, and it failed.

That’s it.

It didn’t inspect running processes to see whether dockerd exists. It just tried to open a communication channel and got nothing in return.

So this part of the message should be interpreted as:

"I tried talking to Docker. Nobody answered."

It’s your job to find out why no one’s answering.
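A quick way to ask the daemon directly, without the CLI's guesswork, is to send an HTTP request over the socket yourself. This is a minimal sketch that assumes a curl build with --unix-socket support; it calls the Engine API's /_ping endpoint, which a healthy daemon answers with OK. The function name ping_docker_socket is hypothetical.

```shell
# Hypothetical helper: ask dockerd "are you there?" directly over its socket.
# Succeeds only if something is listening AND it answers the API ping with "OK".
ping_docker_socket() {
  local sock="${1:-/var/run/docker.sock}"
  local reply
  # The "localhost" in the URL only fills the HTTP Host header; the
  # connection itself goes to the socket file named by --unix-socket.
  if ! reply=$(curl --silent --show-error --max-time 5 \
      --unix-socket "$sock" http://localhost/_ping 2>&1); then
    echo "no answer on $sock: $reply" >&2
    return 1
  fi
  [ "$reply" = "OK" ]
}
```

Usage: ping_docker_socket && echo "dockerd answered". A connection error here, with no reply at all, points at the socket or the daemon rather than at the CLI.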

“at unix:///var/run/docker.sock”

This part is crucial. It tells you how the CLI attempted to talk to the daemon: via a Unix domain socket.

That’s not a regular file. It’s a special kind of IPC (inter-process communication) mechanism used by Docker to receive commands from the CLI.

This file:

/var/run/docker.sock

…is the main bridge between docker (the client) and dockerd (the daemon). When that bridge is broken, everything stops working.

If this file doesn't exist or your user can’t access it, docker won’t work, even if dockerd is running in the background.
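To tell these failure modes apart, you can check the socket path before blaming the daemon. A sketch assuming a POSIX shell; classify_docker_socket and its output labels are hypothetical names, not Docker tooling. The dangling-symlink case is included because some setups expose /var/run/docker.sock as a symlink to a socket that only exists while the daemon behind it is up.

```shell
# Hypothetical helper: explain why a socket path can't be used by the CLI.
# Prints one of: dangling-symlink, missing, not-a-socket, no-access, ok
classify_docker_socket() {
  local sock="${1:-/var/run/docker.sock}"
  if [ -L "$sock" ] && [ ! -e "$sock" ]; then
    echo "dangling-symlink"   # the link exists but its target socket doesn't
  elif [ ! -e "$sock" ]; then
    echo "missing"            # daemon not running, or listening somewhere else
  elif [ ! -S "$sock" ]; then
    echo "not-a-socket"       # e.g. a directory created by a bind mount whose host socket didn't exist
  elif [ ! -r "$sock" ] || [ ! -w "$sock" ]; then
    echo "no-access"          # socket exists, but your user lacks read/write permission
  else
    echo "ok"                 # path is usable; the daemon may still be unresponsive
  fi
}
```

Usage: classify_docker_socket, or classify_docker_socket /some/other/docker.sock for a non-default path.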

“Is the Docker daemon running?”

This is the red herring.

The CLI doesn’t know. It’s just guessing. That question is Docker's way of saying:

"I don’t have enough information to know what failed. It might be that dockerd isn't running. It might be that you’re pointing at the wrong socket. It might be a permission issue."

It’s telling you to check both ends of the connection: the daemon, and the socket.

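This one message can appear in several distinct situations: the daemon is stopped, the socket is missing or inaccessible to your user, or the CLI is pointed at the wrong endpoint through its context or a stale DOCKER_HOST.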

Figure out which one you’re hitting

This is what separates pasting fixes from solving the problem.

Ask yourself these questions:

Is the daemon running at all?

sudo systemctl status docker

Is the socket there and owned properly?

ls -l /var/run/docker.sock

Is my CLI pointing at the right context?

docker context ls

docker context inspect --format '{{ .Endpoints.docker.Host }}'

Is the environment lying to me?

echo $DOCKER_HOST

If any of these fail, you’ve got your answer.
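The last two questions interact: when DOCKER_HOST is set, it overrides the active context (recent CLI versions print a warning to that effect when you run docker context use). The sketch below prints the endpoint the CLI will most likely target. It is a simplification that ignores the --host and --context command-line flags, and the function name effective_docker_endpoint is hypothetical.

```shell
# Hypothetical helper: print the endpoint the docker CLI will most likely use.
# Assumption: DOCKER_HOST, when set, wins over the active context.
effective_docker_endpoint() {
  local host
  if [ -n "${DOCKER_HOST:-}" ]; then
    echo "$DOCKER_HOST"
    return 0
  fi
  if command -v docker >/dev/null 2>&1; then
    # Reads the active context's endpoint from the CLI's local config.
    host=$(docker context inspect --format '{{ .Endpoints.docker.Host }}' 2>/dev/null)
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
  fi
  echo "unix:///var/run/docker.sock"   # Linux default when nothing else is configured
}
```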

How to fix the docker daemon error — by platform

Let’s cut to the chase. You're seeing:

Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?

Here’s how to fix it, based on where and how you're running Docker.

A. Linux (Ubuntu, Debian, Arch, etc.)

Problem 1: Docker daemon isn’t running

Fix:

sudo systemctl start docker

Make it persistent on boot:

sudo systemctl enable docker

Check status:

sudo systemctl status docker
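On systemd hosts you can check both conditions (running now, starting on boot) in one go. A sketch; check_docker_service is a hypothetical name. One caveat: installs that ship a docker.socket unit use socket activation, so docker.service may be inactive until the first request arrives. Treat a "not running" result there as a hint, not a verdict.

```shell
# Hypothetical helper for systemd-based Linux: is docker running now,
# and will it come back after a reboot?
check_docker_service() {
  if ! systemctl is-active --quiet docker; then
    echo "docker is not running: sudo systemctl start docker"
    return 1
  fi
  if ! systemctl is-enabled --quiet docker; then
    echo "docker is running but won't start on boot: sudo systemctl enable docker"
    return 2
  fi
  echo "docker is running and enabled on boot"
}
```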

Problem 2: Missing socket permissions

Fix: Add your user to the docker group:

sudo usermod -aG docker $USER

newgrp docker # OR log out and back in

Then try:

docker ps

Problem 3: Snap install quirks

Snap isolates services, so don’t assume it's the same as apt-based installs.

Check daemon status:

snap services docker.dockerd

Restart daemon:

sudo snap restart docker

B. macOS (Docker Desktop)

Problem 1: Docker Desktop isn't running

Docker on macOS runs in a virtualized Linux environment (via HyperKit or Apple’s native hypervisor). If Docker Desktop isn’t running, nothing is.

Fix: Open Docker Desktop. Wait for "Docker Engine Running" to appear in the GUI.
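If you launch Docker Desktop from a script (for example with open -a Docker on macOS), you can poll until the engine answers instead of watching the GUI. A minimal sketch; wait_for_docker and its 60-second default are assumptions, and the timeout counts one-second polling rounds, so it's approximate.

```shell
# Hypothetical helper: poll until the daemon answers `docker info`, or give up.
# Usage: wait_for_docker [timeout_seconds]   (default 60)
wait_for_docker() {
  local timeout="${1:-60}" waited=0
  until docker info >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Docker engine still not responding after ~${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "Docker engine is up"
}
```

Usage: open -a Docker && wait_for_docker 120. The same loop works in CI jobs where a dind service may need a few seconds to come up.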

Problem 2: CLI is pointing at the wrong context

Fix:

docker context use desktop-linux

Verify:

docker context ls

If you installed the Docker CLI via Homebrew and also use Docker Desktop, be careful: you might end up with conflicting binaries or configs.

Problem 3: You’re using sudo, but your socket is under your user session

Docker Desktop’s socket lives in your user’s home directory under ~/Library/Containers/....

Running sudo docker ... bypasses that and causes connection failures.

Fix: Just don’t use sudo with Docker on macOS.

C. WSL2 (Windows Subsystem for Linux)

Problem 1: Docker Desktop isn’t integrated with your WSL distro

Fix:

- Open Docker Desktop

- Go to Settings > Resources > WSL Integration

- Enable your distro (e.g., Ubuntu-20.04)

Problem 2: No systemd support in WSL2

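Older WSL2 setups didn’t support systemd (newer WSL releases can run it, but it has to be switched on with systemd=true under [boot] in /etc/wsl.conf), so running: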

sudo systemctl start docker

...won’t work.

Instead, you may need to start the daemon manually (it runs in the foreground, so keep that terminal open):

sudo dockerd

Bonus: check whether systemd is running as PID 1:

ps -p 1 -o comm=

If you see init instead of systemd, you're on a pre-systemd WSL.
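If you script your WSL2 setup, that PID 1 check can pick the start command for you. A sketch; docker_start_hint is a hypothetical name, and it only prints the suggested command instead of running it, because sudo dockerd stays in the foreground.

```shell
# Hypothetical helper: suggest how to start dockerd based on what PID 1 is.
docker_start_hint() {
  local init_name
  init_name=$(ps -p 1 -o comm= 2>/dev/null | tr -d '[:space:]')
  if [ "$init_name" = "systemd" ]; then
    echo "sudo systemctl start docker"
  else
    # No systemd as PID 1 (e.g. older WSL2): start the daemon directly.
    # It stays in the foreground, so run it in its own terminal.
    echo "sudo dockerd"
  fi
}
```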

Problem 3: Wrong context or stale environment

Check your context:

docker context ls

docker context use default

Clear stale env:

unset DOCKER_HOST

D. CI/CD Pipelines (GitLab, GitHub Actions, Jenkins, etc.)

Problem 1: Docker-in-Docker not set up correctly

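Fix (GitLab CI syntax shown): add a dind service to your job:

```yaml
services:
  - docker:dind

variables:
  DOCKER_HOST: tcp://docker:2375/
  DOCKER_TLS_CERTDIR: ""   # disables TLS, matching the plain-TCP port 2375
```

Or, to reuse the host's daemon instead of Docker-in-Docker, mount its socket when you start the container:

docker run -v /var/run/docker.sock:/var/run/docker.sock ...

Problem 2: Permissions in ephemeral environments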

Make sure the docker.sock mount is:

- Present

- Accessible to the user the job runs as (not root-only)

- Actually points to a live daemon

Check inside your CI container:

ls -l /var/run/docker.sock

If it’s missing or isn’t a socket, you’re not mounting it correctly.
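To make a job fail early with a clear message, you can run a preflight step before any docker command. A sketch under stated assumptions: it treats an unset DOCKER_HOST as the default unix:///var/run/docker.sock, only validates the socket locally for unix:// endpoints, and leaves TCP/SSH reachability to docker info. The name ci_docker_preflight is hypothetical.

```shell
# Hypothetical CI preflight: fail fast, and say why, before the real job runs.
ci_docker_preflight() {
  local host="${DOCKER_HOST:-unix:///var/run/docker.sock}"
  local sock
  case "$host" in
    unix://*)
      sock="${host#unix://}"
      if [ ! -S "$sock" ]; then
        echo "preflight: $sock is missing or not a socket (is it mounted into the job?)" >&2
        return 1
      fi
      ;;
    tcp://*|ssh://*)
      : # remote or dind endpoint; reachability is checked below
      ;;
    *)
      echo "preflight: unrecognised DOCKER_HOST '$host'" >&2
      return 1
      ;;
  esac
  if ! docker info >/dev/null 2>&1; then
    echo "preflight: daemon at $host did not answer 'docker info'" >&2
    return 1
  fi
  echo "preflight: docker OK at $host"
}
```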

E. Remote Docker Daemons (SSH, TCP)

Problem: CLI is trying to connect to a non-existent or misconfigured remote host

Fix 1: Inspect your Docker context:

docker context inspect --format '{{ .Endpoints.docker.Host }}'

If it’s something like ssh://... or tcp://..., your CLI is targeting a remote daemon, and that may be the issue.
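When the endpoint string isn't obviously wrong, it helps to translate it. A small sketch; describe_docker_endpoint is hypothetical and covers the schemes the Docker CLI commonly uses (unix, npipe, tcp, ssh).

```shell
# Hypothetical helper: translate a Docker endpoint URL into what it means.
describe_docker_endpoint() {
  case "$1" in
    unix://*)  echo "local Unix socket at ${1#unix://}" ;;
    npipe://*) echo "Windows named pipe (Docker Desktop on Windows)" ;;
    tcp://*)   echo "TCP daemon at ${1#tcp://} (remote, or Docker-in-Docker)" ;;
    ssh://*)   echo "remote daemon over SSH: ${1#ssh://}" ;;
    "")        echo "no endpoint given" ;;
    *)         echo "unrecognised endpoint: $1" ;;
  esac
}
```

Usage: describe_docker_endpoint "$(docker context inspect --format '{{ .Endpoints.docker.Host }}')"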

Fix 2: Reset to local:

docker context use default

unset DOCKER_HOST

Sanity check your environment

```shell
# See current docker host
echo $DOCKER_HOST

# Check what context you're using
docker context ls

# Check what your CLI is targeting
docker context inspect --format '{{ .Endpoints.docker.Host }}'

# Look at the socket itself
ls -l /var/run/docker.sock
```

Preventing Docker Daemon Failures from Disrupting You Again

Fixing the error is one thing. Making sure it doesn’t happen again (or, worse, silently break production) is what separates quick patchwork from real platform reliability.

Here’s how to bulletproof your Docker setup.

1. Make Docker Start Automatically on Boot (Linux)

If your daemon isn’t running after every restart, it’s probably not enabled to start on boot.

Fix:

sudo systemctl enable docker

You can verify:

sudo systemctl is-enabled docker

Set it and forget it.

2. Add Your User to the Docker Group (and Verify It)

One of the most persistent sources of docker.sock failures is permission-related. If you’ve been relying on sudo docker, you're either masking a deeper problem or limiting Docker usability across tools.

Fix:

sudo usermod -aG docker $USER

newgrp docker

Verify:

groups | grep docker

Still doesn’t work? Check:

ls -l /var/run/docker.sock

If the owner and group read root docker and your user isn’t in the docker group, you’ll keep getting permission denied.
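There's a second trap: usermod changes the group database, but shells that were already open keep their old group list, so groups can disagree with what you just configured. A sketch that separates the two cases, assuming the id utility's -nG option; docker_group_status and its labels are hypothetical.

```shell
# Hypothetical helper: "not in the group" vs "in the group, but this shell
# session predates the change" (the classic usermod-then-retry trap).
docker_group_status() {
  local user
  user=$(id -un)
  # Group database: does the account belong to "docker" at all?
  if ! id -nG "$user" | tr ' ' '\n' | grep -qx docker; then
    echo "not-member"      # fix: sudo usermod -aG docker $USER
    return 1
  fi
  # Current process: has this shell picked the membership up yet?
  if ! id -nG | tr ' ' '\n' | grep -qx docker; then
    echo "stale-session"   # fix: newgrp docker, or log out and back in
    return 2
  fi
  echo "ok"
}
```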

3. Use a Consistent Docker Context

Context confusion is real, especially if you’re bouncing between Docker Desktop, remote daemons, or CI pipelines. The Docker CLI persists the active context across sessions.

Reset to default:

docker context use default

Inspect:

docker context inspect --format '{{ .Endpoints.docker.Host }}'

Clear misleading env vars:

unset DOCKER_HOST

Optional: Add to your shell config:

```shell
# ~/.bashrc or ~/.zshrc
unset DOCKER_HOST
docker context use default
```

This saves hours of head-scratching later.

4. Avoid Multiple Docker Installations (Desktop + CE)

Running both Docker Desktop and Docker CE on the same machine? Prepare for chaos.

- You’ll have multiple daemons.

- Multiple sockets.

- Multiple docker binaries fighting for control.

Do this instead:

- Use Docker Desktop or Docker CE, not both.

- If you must use both (e.g. macOS with Linux CI), isolate contexts carefully and never mix root/non-root usage.
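To see whether you already have competing CLIs, list every docker executable on your PATH. A sketch, assuming a readlink that supports -f (it falls back to the raw path otherwise); list_docker_binaries is a hypothetical name. Symlinks are resolved so that, for example, /bin/docker and /usr/bin/docker on merged-/usr systems count once.

```shell
# Hypothetical helper: list every distinct `docker` executable on $PATH.
# More than one line of output usually means competing installs.
list_docker_binaries() {
  local dir old_ifs="$IFS"
  IFS=:
  set -f                        # don't glob-expand PATH entries
  for dir in $PATH; do
    [ -n "$dir" ] || dir=.      # an empty PATH entry means the current directory
    if [ -f "$dir/docker" ] && [ -x "$dir/docker" ]; then
      # Resolve symlinks so aliases of one binary collapse to one entry.
      readlink -f "$dir/docker" 2>/dev/null || printf '%s\n' "$dir/docker"
    fi
  done | awk '!seen[$0]++'      # de-duplicate, keeping PATH order
  set +f
  IFS="$old_ifs"
}
```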

5. Add Daemon Health to Your Monitoring Stack

Most monitoring setups check the health of containers. Very few check whether the daemon itself is alive.

This is what silently kills your pipelines, your scripts, and your dev machines.

Quick hack (Bash health check):

```shell
#!/bin/bash
if ! docker info > /dev/null 2>&1; then
  echo "Docker daemon not responding!"
  exit 1
fi
```

Schedule this with cron or a lightweight systemd timer.

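Prometheus user? Use cAdvisor or node_exporter plus a check on the dockerd process or the docker.service unit (node_exporter's optional systemd collector exposes unit state you can alert on).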

6. Watch the Socket File

The socket itself can disappear during a crash or shutdown, and if it does, docker commands will fail with zero visibility unless you’re watching it.

Basic health probe:

[[ -S /var/run/docker.sock ]] || echo "Missing Docker socket"

Use this in local scripts or as a pre-check in CI jobs.

Pro Tip: Set DOCKER_HOST Explicitly in CI Pipelines

In CI, you often rely on Docker-in-Docker or shared sockets. Don’t assume defaults.

Example: GitLab CI

```yaml
variables:
  DOCKER_HOST: "tcp://docker:2375"
  DOCKER_TLS_CERTDIR: ""
```

Explicit is always better than implicit.

7. Document Post-Install Steps in Your Infra Code

Many errors happen when team members forget to add their user to the docker group, enable systemd units, or use the wrong context.

If you manage infra via Ansible, Bash, or Terraform, bake in these steps:

- usermod -aG docker

- systemctl enable docker

- docker context use default

- unset DOCKER_HOST

Standardization beats tribal knowledge.
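As a starting point for baking these steps in, here is a hedged shell sketch of a provisioning step for a systemd host, meant to run as root. run_step, docker_post_install, and the DRY_RUN switch are hypothetical. unset DOCKER_HOST is left out on purpose: it only affects the shell that runs it, so it belongs in a shell profile your tooling manages.

```shell
# Hypothetical provisioning sketch: run as root on a systemd host.
# Set DRY_RUN=1 to print the commands instead of executing them.
run_step() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

docker_post_install() {
  local user="$1"
  if [ -z "$user" ]; then
    echo "usage: docker_post_install <username>" >&2
    return 1
  fi
  run_step usermod -aG docker "$user"        # socket access without sudo
  run_step systemctl enable --now docker     # start now and on every boot
  # Contexts are stored per user (~/.docker), so set it as that user.
  run_step sudo -u "$user" docker context use default
}
```

Usage: DRY_RUN=1 docker_post_install alice prints the three commands without running them.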

Why This Error Matters (and What It Teaches About Reliability)

Let’s zoom out for a second.

A broken docker.sock or silent dockerd failure might seem like a minor local dev issue. But for modern teams, especially SREs, DevOps engineers, and platform owners, Docker is foundational.

If the Docker daemon goes down, or if communication with it breaks, everything built on top of it grinds to a halt:

- CI pipelines fail silently

- Infra-as-code steps time out

- Deployments hang with no logs

- Local development becomes impossible

- On-call engineers waste hours diagnosing it mid-incident

This is a reliability issue.

What This Error Reveals

It’s not just about Docker. This error shows what happens when:

- Core system components aren’t monitored

- Post-install hardening steps are skipped

- Daemon health is assumed, not validated

- User permissions and contexts aren't standardized

You don’t need 10,000 containers to care about this. One missed restart in prod is enough.

What High-Reliability Teams Do Differently

Top engineering orgs:

- Treat Docker like any other stateful service: monitored, restarted, alerted on

- Automate post-install steps: no tribal setup, no flaky configs

- Avoid dual installs: CE vs Desktop vs Snap — pick one and standardize

- Embed daemon checks into pipelines: Docker not available? Fail early with clear output

- Document context management: no more "why is this command hitting a remote machine from staging?"

From One-Off Fix to Operational Pattern

Every time an error like this shows up, you have two options:

- Patch it and move on

- Patch it, learn from it, and prevent the next one

This blog was written for teams that choose #2.

You now know:

- What the Docker daemon error actually means

- How to fix it across environments (Linux, macOS, WSL, CI)

- How to prevent it from recurring

- And how to make it part of your broader reliability toolkit

Frequently Asked Questions

What does "Cannot connect to the Docker daemon" mean?

This error means the Docker client on your machine can't talk to the background daemon process (dockerd). It may not be running, you might lack permissions, or it could be looking in the wrong place (e.g. wrong socket or context).

How do I check if the Docker daemon is running?

Run sudo systemctl status docker on Linux. If you see "active (running)," it's up. On macOS or Windows with Docker Desktop, open the Docker Desktop app and look for "Engine Running" in the bottom corner.

How do I start the Docker daemon?

Use sudo systemctl start docker on Linux. On macOS or Windows, just launch Docker Desktop. In WSL2, you may need to run sudo dockerd if systemd isn't supported.

Why do I get a permission error on docker.sock?

If your user isn't in the docker group, the system denies access to the Docker socket (/var/run/docker.sock). Add your user with sudo usermod -aG docker $USER, then log out and back in.

What is /var/run/docker.sock?

It's a Unix socket file the Docker CLI uses to communicate with the Docker daemon. If it doesn't exist, or you don't have permission to use it, Docker commands will fail.

What are Docker contexts, and how can they cause this error?

Docker contexts define where the Docker CLI should send commands: to a local or a remote daemon. If the current context points to the wrong socket or remote host, you may get connection errors. Use docker context ls and docker context use default to manage it.

Why does the error come back after a reboot?

If Docker wasn't set to start on boot, the daemon won't run automatically after a reboot. Use sudo systemctl enable docker to ensure it starts up next time.

How do I fix this error in CI/CD pipelines?

In CI environments like GitLab or GitHub Actions, the Docker daemon might not be initialized or exposed. You may need to use Docker-in-Docker (dind), set DOCKER_HOST, or mount the socket manually.

How can I monitor the Docker daemon?

You can script a simple health check using docker info, or monitor the Docker process and socket file. For production systems, integrate daemon health into observability tools or on-call platforms.

Can I prevent this error entirely?

You can't prevent every edge case, but you can eliminate 95% of them by setting up Docker properly (correct permissions, startup settings, context defaults), monitoring the daemon, and using reliable workflows that surface failures early.

Rohan Taneja

Writing words that make tech less confusing.