How Dev and SRE Teams Split Work


Every engineering org, at some point, has to draw a line between what developers own and what SREs take care of. That line never stays fixed; it keeps shifting with org maturity, platform investments, and how comfortable people are with risk and ownership. A shared incident priority matrix, which spells out how incidents get prioritized, can bring some clarity to where that line sits.

Most of us have seen both extremes. In one setup, devs push code and expect SREs to deploy, monitor, and catch the fallout. In the other, devs are deep in Terraform and Helm values, shipping straight to prod with no safety rails. Both can work, and both can become operational nightmares if the boundaries are unclear.

What makes this conversation tricky is that there's no one right model. But there are patterns that work better depending on your architecture, tooling, and how your teams think about reliability.

This post breaks down the real-world models we see across engineering orgs. These are patterns pulled straight from how teams actually ship and run software. Whether you’re in a dev-heavy org with no SREs or part of a structured platform team, there’s something in here that will help you understand where you are and where you could go next.

Let’s start at one end of the spectrum, where devs own everything, infra included. You might think it's chaos. Sometimes it is. But it also teaches some sharp lessons about scaling ownership.

When devs own it all, top to bottom

There are orgs where developers write the business logic and own the infrastructure. They write their own Terraform, provision cloud resources, set up IAM, deploy through CI/CD pipelines they configure themselves, and handle on-call rotations for their own services.

This model sounds like autonomy, and it is, until it becomes overhead. When every team builds its own infra stack from scratch, fragmentation sets in fast: different tagging strategies, inconsistent alerting, ad hoc decisions around retries and timeouts. Then you hit your first incident and realize your teams aren't aligned on severity levels or escalation paths.

This kind of setup is common in early-stage startups. You optimize for speed and assume your engineers are full stack, platform included. It works until the blast radius grows and tooling debt starts blocking reliability. Then you either hire platform engineers or you burn out the folks trying to carry both app and infra context in their heads.

The upside is that teams get firsthand exposure to production concerns. They learn how resource limits work, how to define SLOs, what a real pager looks like. That knowledge becomes valuable once you bring in SREs or centralize operations because now the devs speak the same language.
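For teams new to this, defining an SLO mostly comes down to a bit of arithmetic. Here is a minimal Python sketch of an availability SLO and its error budget; the `Slo` class and its method names are illustrative assumptions, not taken from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slo:
    """An availability SLO: the share of requests that must succeed over a window."""

    name: str
    target: float  # e.g. 0.999 means 99.9% of requests must succeed
    window_days: int = 30

    def __post_init__(self) -> None:
        # A 100% target leaves no error budget at all, so reject it up front.
        if not 0.0 < self.target < 1.0:
            raise ValueError("target must be between 0 and 1 (exclusive)")

    def error_budget_minutes(self) -> float:
        """Minutes of total outage the window can absorb before the SLO is missed."""
        return (1.0 - self.target) * self.window_days * 24 * 60

    def budget_remaining(self, good: int, total: int) -> float:
        """Fraction of the request-based error budget still unspent (negative once blown)."""
        if total == 0:
            return 1.0
        allowed_failures = (1.0 - self.target) * total
        actual_failures = total - good
        return 1.0 - actual_failures / allowed_failures


checkout = Slo(name="checkout-availability", target=0.999)
print(round(checkout.error_budget_minutes(), 1))  # 43.2 minutes per 30 days
print(round(checkout.budget_remaining(good=999_500, total=1_000_000), 2))  # half the budget left
```

A 99.9% target sounds strict, but seeing it as roughly 43 minutes a month is usually what makes the conversation about release pace and risk concrete.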

But without a shared contract around how infra is built and run, this model creates more risk as systems scale. You get drift. You get one-off snowflake deploys. You get teams rolling their own Prometheus exporters because nobody told them there's already a standardized one in use.
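A shared contract doesn't have to start big. As a sketch, the Python below checks resources against one org-wide tagging standard. The tag names, allowed environments, and resource dictionaries are hypothetical; in practice the input might come from a Terraform plan or a cloud inventory export.

```python
from dataclasses import dataclass

# Hypothetical org-wide contract: every resource carries these tags.
REQUIRED_TAGS = {"team", "service", "env", "cost-center"}
ALLOWED_ENVS = {"dev", "staging", "prod"}


@dataclass
class Violation:
    resource_id: str
    problem: str


def check_tags(resources: list[dict]) -> list[Violation]:
    """Return every tagging violation instead of stopping at the first one."""
    violations: list[Violation] = []
    for resource in resources:
        rid = resource.get("id", "<unknown>")
        tags = resource.get("tags", {})
        missing = REQUIRED_TAGS - set(tags)
        if missing:
            violations.append(Violation(rid, f"missing tags: {', '.join(sorted(missing))}"))
        env = tags.get("env")
        if env is not None and env not in ALLOWED_ENVS:
            violations.append(Violation(rid, f"unknown env '{env}'"))
    return violations


resources = [
    {"id": "bucket-logs", "tags": {"team": "payments", "service": "ledger", "env": "prod", "cost-center": "cc-42"}},
    {"id": "queue-jobs", "tags": {"team": "growth", "env": "production"}},
]
for violation in check_tags(resources):
    print(violation.resource_id, violation.problem)
```

Running a check like this in every team's pipeline is what turns "we agreed on tags" into something that actually holds as the number of teams grows.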

Which brings us to the opposite extreme: a setup where SREs own the infra and devs stay focused on code. Let’s walk through that next.

When SREs hold the production path

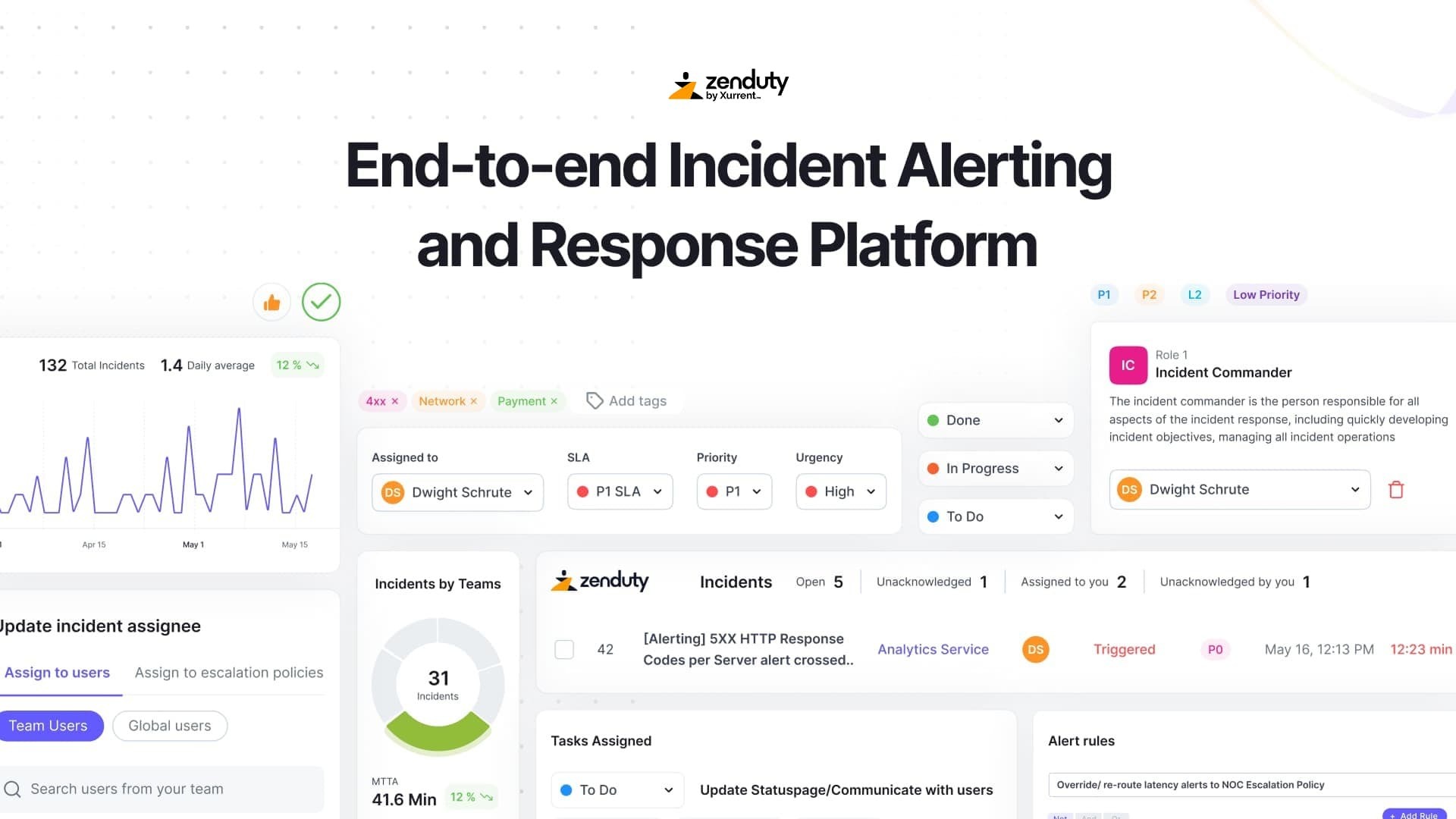

In some orgs, the deployment pipeline stops at the SRE team. Devs write code and validate in lower environments, but anything that touches production goes through ops. These teams manage infra, enforce deployment rules, own monitoring, and use dedicated incident management software while handling on-call duties.

This structure works best when the platform is complex or regulated and you need controlled rollouts, hardened pipelines, and clear separation of duties. The tradeoff is that SREs become a critical dependency in every release cycle, and the more services you have, the worse that bottleneck gets.
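Separation of duties is often enforced as a rule in the pipeline itself. This Python sketch assumes a hypothetical `SRE_APPROVERS` group and a simple `DeployRequest` record: lower environments are self-service, and production is blocked unless an SRE other than the author has approved.

```python
from dataclasses import dataclass, field

# Hypothetical membership of the SRE group that gates production.
SRE_APPROVERS = {"asha", "mateo", "lin"}


@dataclass
class DeployRequest:
    service: str
    environment: str
    author: str
    approvers: set[str] = field(default_factory=set)


def check_deploy(req: DeployRequest) -> tuple[bool, str]:
    """Lower environments pass; production needs an SRE approver who isn't the author."""
    if req.environment != "production":
        return True, "self-service environment"
    # Self-approval doesn't count toward separation of duties.
    sre_approvals = (req.approvers & SRE_APPROVERS) - {req.author}
    if not sre_approvals:
        return False, "production deploy needs approval from an SRE other than the author"
    return True, "approved by " + ", ".join(sorted(sre_approvals))


print(check_deploy(DeployRequest("checkout", "staging", author="dev-1")))
print(check_deploy(DeployRequest("checkout", "production", author="asha", approvers={"asha"})))
```

Note that every production release now waits on someone in `SRE_APPROVERS`, which is exactly where the bottleneck shows up as the service count grows.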

A gap also forms: SREs end up debugging systems they didn’t design, while poor observability tooling shields devs from production signals. That slows triage and cuts off the feedback loops that drive reliability improvements.

You can make this model work, but only if you layer in automation and give devs observability into their own code in production. Otherwise, you’re just centralizing toil.

Next, let’s look at what changes when SRE teams shift their focus from being the deploy gate to building the platform as a product.

When platform becomes the product

This is where the shift happens. SRE isn’t a ticket queue or an on-call buffer. It’s engineering work that makes product teams faster and more reliable without making them ask for permission.

Instead of handling every deploy, SREs build reusable pipelines. Instead of triaging alerts, they create and maintain observability tools that teams use on their own. They define standards, write Terraform modules, ship opinionated Helm charts, and maintain templates that enforce good practices.
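One way to picture an opinionated template: the platform ships defaults, teams override what they need, and a few settings stay locked. The Python sketch below is a hypothetical illustration of that idea (similar in spirit to overriding a Helm chart's values), not Helm's actual implementation; the keys and the locked setting are made up for the example.

```python
import copy
from typing import Any

# Hypothetical platform defaults shipped with a service template.
PLATFORM_DEFAULTS: dict[str, Any] = {
    "replicas": 2,
    "timeout_seconds": 5,
    "max_retries": 2,
    "alerts": {"error_rate_threshold": 0.02, "page_on_call": True},
}

# Settings the platform team owns; product teams cannot override them.
LOCKED_KEYS = {("alerts", "page_on_call")}


def render_service_config(overrides: dict[str, Any]) -> dict[str, Any]:
    """Deep-merge a team's overrides onto a copy of the platform defaults."""
    config = copy.deepcopy(PLATFORM_DEFAULTS)
    _merge(config, overrides, path=())
    return config


def _merge(base: dict[str, Any], overrides: dict[str, Any], path: tuple[str, ...]) -> None:
    for key, value in overrides.items():
        key_path = path + (key,)
        if key_path in LOCKED_KEYS:
            raise ValueError(f"{'.'.join(key_path)} is owned by the platform team")
        if isinstance(base.get(key), dict):
            # Replacing a whole section with a scalar would bypass locked keys.
            if not isinstance(value, dict):
                raise ValueError(f"{'.'.join(key_path)} must be a mapping")
            _merge(base[key], value, key_path)
        else:
            base[key] = value


config = render_service_config({"replicas": 4, "alerts": {"error_rate_threshold": 0.05}})
print(config)
```

Teams get a working config with zero input, can tune what they own, and the few decisions that must stay consistent across the org are enforced by the template rather than by review.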

When it works, devs own deployments and on-call, while SREs focus on system-wide engineering challenges. That takes trust, strong documentation, and clear pull request review processes. It also needs feedback loops between platform and product, and a culture that expects teams to own their path to prod.

From here, the next step is less about ownership lines and more about collaboration. Let’s talk about what happens when you start blending the boundaries between SRE and dev.

When lines blur and cross-pollination works

Some of the best outcomes happen when there’s overlap. Devs who understand how their services behave in prod. SREs who can jump into app code during an incident and not slow the team down. That blend kills silos before they form.

Mature teams often share Slack channels, run joint retros, and co-author incident post-mortems. Developers reason about retry budgets and alert thresholds, while SREs understand feature flags and failure domains. Pairing during incidents builds shared mental models and better incident response, especially when it’s backed by a clear incident response team structure.
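A retry budget is a good example of a concept both sides should share. In this Python sketch, retries are allowed only while they stay under a fixed percentage of requests (with a small floor), so a struggling dependency doesn't get hit by a retry storm. It is loosely modeled on the retry budgets some RPC frameworks and proxies offer; the class and parameter names are hypothetical, and a real implementation would count over a sliding time window.

```python
class RetryBudget:
    """Cap retries at a percentage of observed requests, with a small floor.

    Simplified: counts never reset here; a production budget would use a
    sliding time window.
    """

    def __init__(self, ratio_percent: int = 10, min_retries: int = 3) -> None:
        self.ratio_percent = ratio_percent
        self.min_retries = min_retries
        self.requests = 0
        self.retries = 0

    def record_request(self) -> None:
        self.requests += 1

    def try_acquire_retry(self) -> bool:
        """Return True (and count the retry) if one more retry fits in the budget."""
        # Integer math avoids float rounding surprises at the boundary.
        allowed = max(self.min_retries, self.requests * self.ratio_percent // 100)
        if self.retries < allowed:
            self.retries += 1
            return True
        return False


budget = RetryBudget(ratio_percent=10, min_retries=3)
for _ in range(100):
    budget.record_request()
granted = sum(budget.try_acquire_retry() for _ in range(15))
print(granted)  # 10: retries stop at 10% of 100 requests
```

The floor keeps low-traffic services from being unable to retry at all, while the percentage cap is the part an SRE cares about during an incident.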

You don’t need everyone to know everything. But the more operational context devs carry, and the more engineering time SREs spend upstream, the fewer surprises show up in incident review.

Next up, let's talk about how different orgs structure this collaboration and where things can break if the shape of the team doesn’t match the shape of the work.

When org structure shapes reliability

The way teams are organized changes how work gets done. Some companies split responsibilities by layer. One team owns the AWS account setup, another handles Kubernetes, another runs observability. Dev teams consume all of it through shared tooling.

This model works when every piece is modular and stable. But if one layer changes too often or lacks documentation, everything above it slows down. Dev teams get blocked not because they lack skills, but because they’re waiting on ownership to catch up.

Others embed SREs into product squads, shortening feedback loops and spreading knowledge between SREs and software engineers. A third model offers SRE support conditionally, based on how critical a service is. Each approach has trade-offs: shared services need excellent documentation, embedded SREs scale poorly, and conditional support relies on teams already knowing best practices and having good SRE tools.
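Conditional support is often written down as a tier policy. The Python sketch below maps hypothetical criticality tiers to how much SRE involvement a service gets; the tier names and the policy itself are assumptions for illustration.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical criticality tiers; a lower number means more critical."""

    CRITICAL = 1   # customer-facing, revenue-bearing
    IMPORTANT = 2  # internal, but on the critical path
    STANDARD = 3   # everything else


# Hypothetical mapping from tier to SRE involvement.
SUPPORT_BY_TIER = {
    Tier.CRITICAL: {"sre_on_call": True, "sre_design_review": True},
    Tier.IMPORTANT: {"sre_on_call": False, "sre_design_review": True},
    Tier.STANDARD: {"sre_on_call": False, "sre_design_review": False},
}


def support_for(service: str, tier: Tier) -> str:
    """Describe the support model a service gets under this policy."""
    support = SUPPORT_BY_TIER[tier]
    if support["sre_on_call"]:
        return f"{service}: SREs share on-call and review designs"
    if support["sre_design_review"]:
        return f"{service}: team on-call, SRE design review"
    return f"{service}: self-service on platform defaults"


print(support_for("checkout-api", Tier.CRITICAL))
print(support_for("report-exporter", Tier.STANDARD))
```

The `STANDARD` tier is where the trade-off bites: those services stay healthy only if the team already follows good practices and the platform defaults are solid.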

Let’s look at what happens when the setup breaks. Because it does. And you can feel it when it starts to strain.

When the model breaks down

You know it's broken when devs lose prod access but still get alerts, or when SREs debug without context. Repeated themes in incident analyses, ineffective escalation, or confusing incident management KPIs signal deeper issues.

Another sign is when every team builds their own version of the same thing. Different CI pipelines, custom Terraform layouts, bespoke dashboards. Without shared tooling, every fix becomes one-off glue.

And then there’s on-call. If your escalation tree depends on who last touched the YAML, your incident process is broken. If alerts route to the wrong team because no one owns the service map, your MTTR will show it.
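The fix for routing is to make ownership data instead of memory. Here is a minimal Python sketch, assuming a hypothetical service catalog that maps each service to its owning team's escalation policy; unowned services go to a catch-all rotation and get flagged.

```python
from dataclasses import dataclass

# Hypothetical service catalog: service name -> owning team's escalation policy.
SERVICE_OWNERS = {
    "checkout-api": "payments-oncall",
    "search-indexer": "discovery-oncall",
}
FALLBACK_POLICY = "sre-triage"  # hypothetical catch-all rotation


@dataclass
class Alert:
    service: str
    summary: str


def route(alert: Alert) -> tuple[str, bool]:
    """Return (escalation policy, owned). Unowned alerts go to triage and are flagged."""
    policy = SERVICE_OWNERS.get(alert.service)
    if policy is None:
        return FALLBACK_POLICY, False
    return policy, True


print(route(Alert("checkout-api", "5xx rate above 2%")))
print(route(Alert("legacy-cron", "nightly job failed")))
```

Tracking how often `owned` comes back `False` gives you a concrete list of gaps in the service map to close.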

These are signals that the model needs a reset. The fix usually isn’t new workflows or extra tools. It’s clearer ownership, infra that doesn't reinvent itself team by team, and realigning what teams own and how they collaborate.

Next, let’s cut through the noise and get to what good SRE actually looks like. Because this is where the role either adds leverage or gets buried.

When SRE work actually matters

The impact of SRE shows up when you're not in the room. When deploys roll out cleanly without needing a Slack ping. When alerts are tuned enough that people actually trust them. When teams solve their own incidents because the tooling makes that possible.

SRE isn’t measured by how fast you acknowledge an alert, but by how rarely those alerts fire in the first place. The real value is in building systems that scale predictably, not just in reacting faster when they fail.
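Knowing how rarely alerts fire, and whether anyone had to act when they did, starts with the alert history. This Python sketch assumes each alert event was recorded with a hypothetical `actionable` flag (for example, set during on-call handoff) and surfaces rules that fire often but rarely need action. The thresholds are arbitrary starting points.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AlertEvent:
    rule: str
    actionable: bool  # hypothetical field: did a responder need to act?


def noisy_rules(
    events: list[AlertEvent], min_fires: int = 5, max_actionable_rate: float = 0.5
) -> list[tuple[str, int, float]]:
    """Return (rule, fires, actionable rate) for noisy rules, noisiest first."""
    fires = defaultdict(int)
    acted = defaultdict(int)
    for event in events:
        fires[event.rule] += 1
        acted[event.rule] += event.actionable  # bool counts as 0 or 1
    noisy = [
        (rule, count, acted[rule] / count)
        for rule, count in fires.items()
        if count >= min_fires and acted[rule] / count <= max_actionable_rate
    ]
    # Lowest actionable rate first; ties broken by how often the rule fires.
    return sorted(noisy, key=lambda item: (item[2], -item[1]))


events = (
    [AlertEvent("disk-80pct", False)] * 9
    + [AlertEvent("disk-80pct", True)]
    + [AlertEvent("cpu-high", False)] * 6
    + [AlertEvent("checkout-5xx", True)] * 6
    + [AlertEvent("dns-flap", False)] * 3
)
print(noisy_rules(events))
```

Rules at the top of this list are candidates for retuning, downgrading to a ticket, or deleting, which is how alerts earn back the trust of the people they page.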

That means investing in the boring parts. Improving service ownership metadata. Codifying SLIs into CI pipelines. Turning tribal ops knowledge into documented playbooks. Automating the glue that connects observability, deploys, and alerts into something teams can use with confidence.
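Here is what codifying ownership metadata and SLIs can look like as a CI step. The Python sketch validates a hypothetical service catalog entry; the field names (`owner`, `escalation_policy`, `runbook_url`, `slis`) are assumptions, and the CI job would fail the build whenever the returned list is non-empty.

```python
# Hypothetical required fields for a service catalog entry (e.g. loaded from YAML in the repo).
REQUIRED_FIELDS = ("owner", "escalation_policy", "runbook_url", "slis")


def validate_service_entry(entry: dict) -> list[str]:
    """Return a list of problems; an empty list means the entry passes."""
    problems = [f"missing '{name}'" for name in REQUIRED_FIELDS if not entry.get(name)]
    for sli in entry.get("slis") or []:
        sli_name = sli.get("name", "<unnamed>")
        objective = sli.get("objective")
        # Every SLI needs a numeric objective strictly between 0 and 1.
        if not isinstance(objective, (int, float)) or not 0 < objective < 1:
            problems.append(f"SLI '{sli_name}' needs an objective between 0 and 1")
    return problems


entry = {
    "owner": "payments",
    "escalation_policy": "payments-oncall",
    "runbook_url": "",
    "slis": [{"name": "availability", "objective": 0.999}, {"name": "latency-p99"}],
}
for problem in validate_service_entry(entry):
    print(problem)
```

Because the check lives in CI, the metadata that routing and escalation depend on can't quietly go stale when a team ships a new service.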

Good SREs spend more time writing reusable infra code than manually patching servers. They review incident patterns, not just incident timelines. They shape system behavior by shaping the defaults.

The best SRE work fades into the background because the tools, patterns, and automation keep teams from needing a rescue in the first place.

When you're ready to shift the model

Getting ownership right means setting boundaries in code and backing them with habits that last beyond org charts. Use standard templates for deploys, observability, and alerting so teams don’t have to reinvent the basics. Make ownership visible in your tooling and reflect it in your escalation paths.

Platform teams need to ship defaults that teams can trust, not just options. Embed expectations into pipelines and infra modules so consistency happens by design, not review. Reliability scales when teams get fast feedback and don’t need to file tickets to stay within guardrails.
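Guardrails in the pipeline can be as simple as a check that reports every violation at once, so teams get fast feedback instead of a ticket. This Python sketch inspects a parsed Kubernetes Deployment manifest; the field paths (`spec.replicas`, `resources.limits`, `readinessProbe`) are standard Kubernetes fields, but the specific rules and the two-replica minimum are hypothetical policy choices. Policy engines such as OPA Gatekeeper or Kyverno cover the same ground in larger setups.

```python
def check_deployment(manifest: dict) -> list[str]:
    """Collect guardrail violations for a Kubernetes Deployment manifest (parsed YAML)."""
    problems: list[str] = []
    spec = manifest.get("spec", {})
    containers = spec.get("template", {}).get("spec", {}).get("containers", [])
    if not containers:
        return ["no containers found in spec.template.spec.containers"]
    for container in containers:
        name = container.get("name", "<unnamed>")
        limits = container.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                problems.append(f"{name}: missing resources.limits.{resource}")
        if "readinessProbe" not in container:
            problems.append(f"{name}: missing readinessProbe")
    # Kubernetes defaults replicas to 1 when the field is omitted.
    replicas = spec.get("replicas", 1)
    if replicas < 2:
        problems.append(f"spec.replicas is {replicas}; guardrail requires at least 2")
    return problems


manifest = {
    "kind": "Deployment",
    "spec": {
        "replicas": 1,
        "template": {"spec": {"containers": [{"name": "api", "resources": {"limits": {"cpu": "500m"}}}]}},
    },
}
for problem in check_deployment(manifest):
    print(problem)
```

Reporting all problems in one pass matters more than it looks: a team fixes everything in a single commit instead of discovering the rules one failed build at a time.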

SREs should stay close to where the design decisions happen, not just where things break. That’s how you influence architecture, catch problems early, and shift teams into owning more without dropping quality.

Let’s wrap this up by stepping back and looking at the bigger picture. Because good models only work when reliability is a shared goal across the org.

When reliability becomes a shared goal

The best models are the ones where reliability isn’t owned by a single team but built into how the org works. Devs own their services, SREs build the systems that support them, and both learn from incidents instead of just resolving them. Less downtime follows from that kind of shared responsibility.

This only happens when leadership treats reliability as a product quality issue, not just an ops metric. You don’t scale by adding more responders. You scale by reducing friction and raising the baseline for every team.

If your platform makes it easy to follow best practices and your culture reinforces shared accountability, you won’t need to define hard boundaries between dev and SRE. The work will split itself the way it needs to.

Foster a culture of reliability within your organization

If your teams are already thinking this way, Zenduty helps make it real. From automated incident routing and actionable alerts to post-incident workflows that actually drive improvement, Zenduty gives your teams the tools to take full ownership without burning out. Try it with your platform or product team and see how it changes your incident response.


Rohan Taneja

Writing words that make tech less confusing.