The Hidden Cost of Alert Fatigue for SREs

You remember your first alert.

What triggered it. What time it came in. How your heart jumped a little as you reached for your laptop in the dark.

You don’t remember your 247th alert.

And that’s where alert noise becomes a silent risk.

For engineers on-call, alerts are meant to be signals that are clear, actionable, and trustworthy. But over time, when the system starts shouting for everything, it stops being helpful. That’s how we end up with alert fatigue: a state where even the critical things blend into the noise.

Most engineers care deeply about uptime, safety, and doing the right thing. But the human brain isn’t built for constant false urgency. Over-alerting teaches teams to ignore the very system that’s meant to protect them.

We’ve seen this across organizations of every size. And the pattern is clear: when alerting is noisy, incident response gets slower, reliability suffers, and engineers burn out.

What Is Alert Fatigue

Alert fatigue happens when alerts lose their urgency. It’s not about laziness or apathy. It’s about what happens when systems treat every anomaly like a crisis, and expect humans to respond perfectly every time.

When the noise is constant, we adapt. We triage mentally. We mute channels. We tell ourselves “I’ll check that later.” And eventually, later never comes.

Why Alert Fatigue Happens

Here are a few common reasons alert fatigue takes root:

1. Too Many Alerts for Non-Issues

Monitoring tools often default to aggressive thresholds. A small CPU spike, a momentary network blip, or a failed ping to a staging box can trigger the same kind of alert as a real outage. When you’re woken up for noise, you stop trusting the system to wake you for signal.

2. Duplicate Alerts from Multiple Tools

One underlying issue might trigger alerts from logs, infra, APM, and synthetic monitors. Without deduplication or intelligent correlation, the same incident can feel like ten different fires.

3. No Clear Ownership or Action Path

Even well-meaning alerts can fail when they don’t come with clarity. If an engineer sees an alert and doesn’t know whether they’re responsible, what it means, or what to do next, the easiest thing to do is nothing.

4. Lack of Feedback Loops

If alerts never get reviewed or updated, bad alerts stick around. That one alert you told yourself you'd fix after the sprint? It's still waking someone up months later. And now it’s just part of the noise.

How Noisy Alerts Hurt More Than Sleep

When alerts are noisy, they chip away at the reliability of your entire system—technical, human, and cultural.

Here’s how that plays out.

Operational Impact

When teams are flooded with alerts, real incidents get buried. Engineers spend valuable time sifting through logs and false positives. During an actual outage, response slows because confidence is already low. You start second-guessing which alerts matter.

Over time, this increases mean time to acknowledge (MTTA) and mean time to resolve (MTTR). And it’s not because your team lacks skill. It’s because your system trained them to hesitate.
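To see whether noise is slowing response, it helps to track these two numbers over time. The snippet below is a minimal sketch, assuming you can export incident records with three timestamps. The field names and sample data are made up for illustration, and MTTR is measured here from the moment the alert fired, which is one common convention among several.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident export: when the alert fired, when a human
# acknowledged it, and when the incident was resolved.
incidents = [
    {"triggered": datetime(2024, 1, 1, 2, 0),
     "acknowledged": datetime(2024, 1, 1, 2, 4),
     "resolved": datetime(2024, 1, 1, 2, 40)},
    {"triggered": datetime(2024, 1, 3, 14, 0),
     "acknowledged": datetime(2024, 1, 3, 14, 10),
     "resolved": datetime(2024, 1, 3, 14, 50)},
    {"triggered": datetime(2024, 1, 7, 23, 0),
     "acknowledged": datetime(2024, 1, 7, 23, 16),
     "resolved": datetime(2024, 1, 8, 0, 30)},
]


def mean_minutes(records, start_key, end_key):
    """Average gap between two timestamps across records, in minutes."""
    gaps = [(r[end_key] - r[start_key]).total_seconds() / 60 for r in records]
    return mean(gaps)


mtta = mean_minutes(incidents, "triggered", "acknowledged")
mttr = mean_minutes(incidents, "triggered", "resolved")
print(f"MTTA: {mtta:.1f} min, MTTR: {mttr:.1f} min")
```

A rising MTTA is often the earlier warning sign of the two, since hesitation shows up in acknowledgment before it shows up in resolution.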

Human Impact

Behind every alert is a human being, and often it’s the same few engineers, week after week. Constant interruptions erode focus, sleep, and confidence. They create anxiety even when nothing’s wrong. Eventually, they lead to detachment. The alert goes off and no one flinches. Not because they’re lazy, but because they’ve been conditioned to expect noise.

This is how burnout starts. Not in fire, but in friction.

Cultural Impact

Alert fatigue spreads into team habits. Teams stop reporting false alerts because "that’s just how it works." Incident reviews stop asking why the alert fired. And new hires learn quickly: you don’t fix the alerts, you just live with them.

Over time, this becomes your default. A noisy alert system teaches engineers to ignore problems. And that’s when reliability really starts to slide.

What Healthy Alerting Looks Like

A healthy alerting system feels like a teammate you can rely on. It doesn’t interrupt you over nothing. It respects your time. And when it speaks up, you know it matters.

When engineers trust their alerts, everything improves. Incidents get resolved faster, sleep is protected, and teams build more confidently.

Here’s what that kind of alerting system usually looks like.

1. It’s Selective, Not Silent

Healthy alerting isn’t about shutting things off. It’s about turning up the signal and cutting back on the noise. You want alerts that catch real problems, not every blip.

If something self-heals in five minutes and has no customer impact, it probably doesn’t need to wake someone up. The goal is fewer distractions, not fewer detections.
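One way to express that rule is to keep detecting every breach but only page once it has lasted past a grace period, or right away if customers are affected. This is a rough sketch under assumed inputs: the function name, its parameters, and the five-minute default are illustrative, not taken from any specific monitoring tool.

```python
from datetime import datetime, timedelta


def should_page(breach_started_at, now, customer_impact,
                grace=timedelta(minutes=5)):
    """Page only if the breach outlasts the grace period or hits customers."""
    if breach_started_at is None:
        # The condition is not currently breached, so there is nothing to page.
        return False
    if customer_impact:
        # Customer-facing problems skip the grace period.
        return True
    return now - breach_started_at >= grace


now = datetime(2024, 1, 1, 3, 0)
print(should_page(now - timedelta(minutes=2), now, customer_impact=False))
print(should_page(now - timedelta(minutes=6), now, customer_impact=False))
```

The breach is still recorded either way. Only the decision to interrupt a person waits for the grace period.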

2. It Brings Context, Not Just Pings

An alert should help you understand what and why. That could mean linking to dashboards, showing recent logs, or even just naming the impacted service.

Context saves time. And in the middle of an incident, it saves a lot more than that.
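A lightweight way to enforce this is to check alert definitions for the context a responder needs before they ever fire. The sketch below assumes alerts are plain dictionaries. The required field names and the example.com URLs are placeholders chosen for illustration.

```python
# Hypothetical set of fields every alert payload should carry.
REQUIRED_CONTEXT = ("service", "summary", "dashboard_url", "runbook_url")


def missing_context(alert):
    """List the context fields an alert payload lacks or leaves empty."""
    return [name for name in REQUIRED_CONTEXT if not alert.get(name)]


bare_alert = {"summary": "High error rate"}
rich_alert = {
    "service": "checkout",
    "summary": "High error rate on checkout",
    "dashboard_url": "https://example.com/dashboards/checkout",
    "runbook_url": "https://example.com/runbooks/checkout-errors",
}

print(missing_context(bare_alert))
print(missing_context(rich_alert))
```

Run as part of a review or a CI check, a helper like this flags alerts that would leave the on-call engineer guessing.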

3. It Knows Where to Land

Every alert should go to someone who’s responsible or knows who is. No one wants alerts floating around in shared channels, waiting for someone to feel guilty enough to reply.

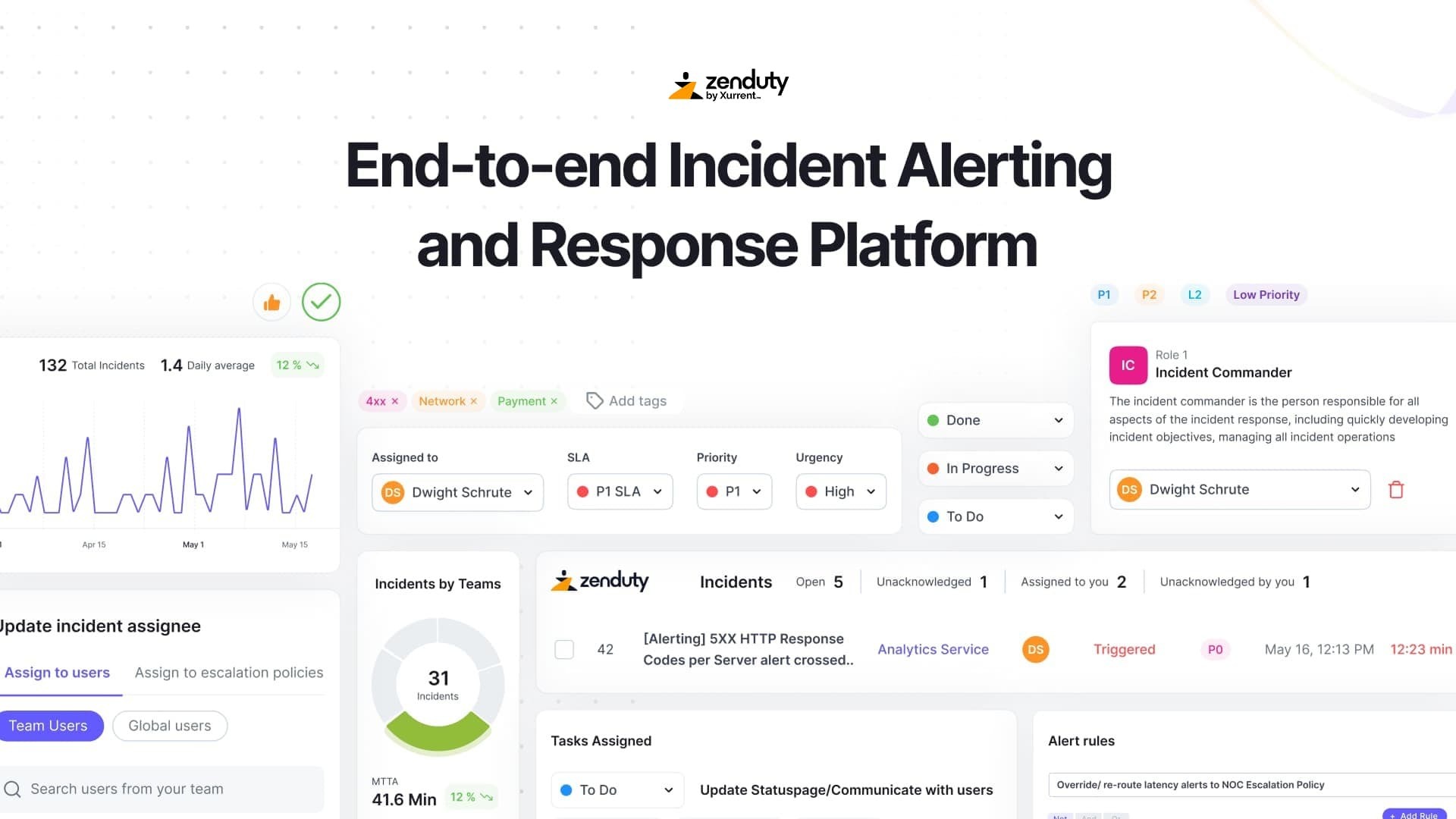

This is where Zenduty helps. We give teams the structure to route alerts to the right place, escalate when needed, and keep things visible without being noisy. Not by taking control away, but by supporting how teams already want to work.

4. It Evolves

The best alerting systems tune thresholds. They retire outdated checks. They bring alert feedback into postmortems. (Try ZenAI, which writes postmortems for you.)

When teams reflect on what alerts were helpful and which ones weren’t, they start to build a system that actually works for them.

Practical Ways to Start Reducing Noise Today

Quieting down alerts requires you to notice where the noise is coming from and make steady improvements from there.

Here are some simple, practical steps that can help.

Start by Listening to the Team

One of the fastest ways to identify noisy alerts is to ask the people who are responding to them. Which ones get ignored? Which ones cause frustration? Which ones come in too often or too late?

This kind of feedback is usually hidden in Slack threads or mentioned in passing during standups. Bring it into the open and make it part of the process.

Group and Deduplicate Where You Can

If a single issue sets off a dozen alerts from different systems, you’re getting more confusion, not more information. Look for ways to merge alerts that are related, or to suppress secondary alerts when a primary one is already active.
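As a rough illustration of grouping, the sketch below buckets alerts by a fingerprint and notifies only for the first alert in each bucket. It assumes alerts about the same service in the same environment belong together, which is a simplification. The field names and sample alerts are hypothetical.

```python
def fingerprint(alert):
    """Assumption: same service + same environment means same incident."""
    return (alert["service"], alert["env"])


def group_alerts(alerts):
    """Group alerts by fingerprint; only the first of each group notifies."""
    groups = {}
    to_notify = []
    for alert in alerts:
        key = fingerprint(alert)
        if key not in groups:
            groups[key] = []
            to_notify.append(alert)  # later alerts in the group stay quiet
        groups[key].append(alert)
    return groups, to_notify


alerts = [
    {"source": "logs", "service": "checkout", "env": "prod"},
    {"source": "apm", "service": "checkout", "env": "prod"},
    {"source": "synthetic", "service": "checkout", "env": "prod"},
    {"source": "infra", "service": "search", "env": "prod"},
]

groups, to_notify = group_alerts(alerts)
print(f"{len(alerts)} alerts -> {len(to_notify)} notifications")
```

The secondary alerts are not thrown away. They stay attached to the group, where they add evidence without adding interruptions.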

Make Ownership and Escalation Clear

If someone isn’t sure an alert is theirs, chances are they’ll hesitate or ignore it. That’s not a lack of care. It’s a gap in clarity.

Make sure alerts are routed to the right rotations or teams. And when things escalate, it should happen through a process that’s predictable and respectful of everyone’s time.

Zenduty lets you design and automate this flow, without having to duct-tape it together from scratch.
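Independent of any particular tool, the underlying idea can be sketched as two small tables: one mapping services to owning rotations, and one listing who gets pulled in the longer an alert stays unacknowledged. Everything here is hypothetical, including the rotation names, the delays, and the function names. It is not a representation of any product’s API.

```python
from datetime import timedelta

# Hypothetical ownership map: service -> on-call rotation.
ROUTES = {"checkout": "payments-oncall", "search": "search-oncall"}
DEFAULT_ROUTE = "platform-oncall"  # catch-all so no alert is ownerless

# Hypothetical escalation steps: (time unacknowledged, who is notified).
ESCALATION = [
    (timedelta(minutes=0), "primary"),
    (timedelta(minutes=10), "secondary"),
    (timedelta(minutes=25), "engineering-manager"),
]


def route(service):
    """Pick the rotation that owns a service, falling back to a default."""
    return ROUTES.get(service, DEFAULT_ROUTE)


def who_to_notify(unacked_for):
    """Roles whose escalation step has been reached while unacknowledged."""
    return [role for delay, role in ESCALATION if unacked_for >= delay]


print(route("checkout"), who_to_notify(timedelta(minutes=12)))
```

Writing the policy down as data makes it predictable: anyone can read when they will be paged and why.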

Use Severity to Protect Attention

Not all alerts are equal. A slight increase in latency doesn’t need the same urgency as a database going down. Your alerting system should reflect that.

Setting severity levels helps the team respond with the right mindset. Urgent issues get immediate focus. Lower-severity alerts can be reviewed during working hours. This keeps your most important signals from getting drowned out.
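A minimal sketch of that policy might look like the following. The severity names, the weekday 9-to-18 working window, and the returned action labels are all assumptions for illustration. Real schedules would also need time zones and holidays.

```python
from datetime import datetime


def delivery(severity, now):
    """Decide how loudly to deliver an alert based on severity and time."""
    if severity == "critical":
        return "page-now"
    # Assumed working hours: Monday to Friday, 09:00 to 18:00 local time.
    working_hours = now.weekday() < 5 and 9 <= now.hour < 18
    return "notify-channel" if working_hours else "hold-until-working-hours"


monday_3am = datetime(2024, 1, 1, 3, 0)
monday_11am = datetime(2024, 1, 1, 11, 0)

print(delivery("critical", monday_3am))
print(delivery("warning", monday_3am))
print(delivery("warning", monday_11am))
```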

Schedule Regular Reviews

Teams change. Infrastructure evolves. What was useful six months ago might be outdated today.

Build in time to review alerts. Ask if they’re still needed, still accurate, and still going to the right place. This doesn’t have to be a huge effort. Even a short monthly check-in can prevent a lot of future frustration.
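To give a review something concrete to look at, you could rank alert rules by how often they fire versus how often anyone had to act. The sketch below assumes each past alert was tagged as actionable or not, for example during triage. The rule names, thresholds, and history are invented for illustration.

```python
from collections import Counter

# Hypothetical alert history, tagged during triage or incident review.
history = (
    [{"rule": "cpu-spike-staging", "actionable": False}] * 4
    + [{"rule": "db-down", "actionable": True}] * 2
    + [{"rule": "latency-p99", "actionable": True}] * 2
    + [{"rule": "latency-p99", "actionable": False}]
)


def noisy_rules(history, min_fires=3, max_actionable_ratio=0.2):
    """Rules that fire often but rarely lead to action."""
    fires = Counter(h["rule"] for h in history)
    useful = Counter(h["rule"] for h in history if h["actionable"])
    return sorted(
        rule for rule, count in fires.items()
        if count >= min_fires and useful[rule] / count <= max_actionable_ratio
    )


print(noisy_rules(history))
```

Rules that land on this list are candidates for tuning, downgrading, or retiring. The final decision still belongs to the team.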

Foster a Proactive, Collaborative Approach to Reliability

At Zenduty, we’ve seen teams make small changes that had a big impact. It often starts with a single alert review, or a rotation check, or a conversation in a retro that wasn’t happening before. One step, then another.

And it adds up.

Every time you tune an alert, remove duplication, clarify ownership, or just listen to what the team is telling you, you’re making things better.

Not just for the system. For the people who run it.

Rohan Taneja

Writing words that make tech less confusing.